Stop guessing how LLMs use your MCP server.

No more scrolling through logs to reconstruct MCP usage.

Inspectr makes Model Context Protocol (MCP) tool calls, prompts, resources, and token usage observable across Claude, OpenAI, and other MCP clients, with one command.

Understanding real MCP usage is harder than it looks

An MCP server can work correctly during development and still be hard to understand once real LLM clients start using it.

In practice, teams rely on logs to understand what happened, but logs make it hard to reconstruct how the LLM actually behaved.

Questions like these are difficult to answer from logs alone:

- Which MCP calls does the LLM or agent actually make?

- Which MCP tools, prompts, or resources were requested, and what output was returned?

- Which flow or sequence of tool calls did the model follow?

- How many tokens were consumed during a conversation?

The challenge is no longer whether the MCP server works, but understanding what the LLM actually did.

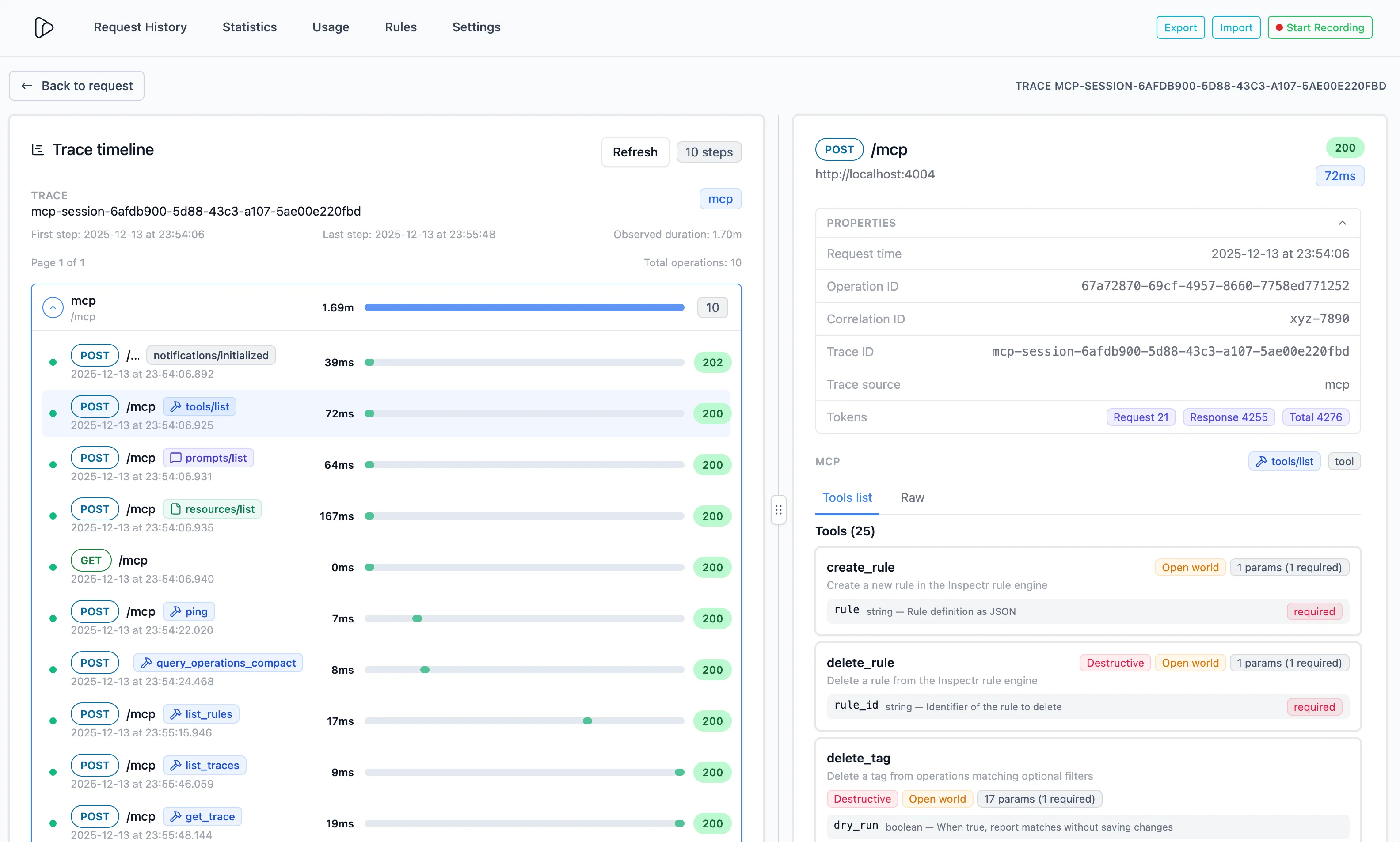

Inspectr provides MCP insights

Flow

Inspectr runs as a transparent, pluggable proxy in front of your MCP server. It captures and interprets MCP traffic in real time, without requiring any changes to your server code.

Full capture of MCP requests

See exactly what was sent and returned across every MCP operation.

MCP flow visibility

Follow the real execution flow, not fragmented log lines.

Call classification

Know whether tools, prompts, or resources were invoked.
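As a rough illustration of how classification can work: MCP clients use standard JSON-RPC method names such as `tools/call`, `prompts/get`, and `resources/read`, so the method prefix is enough to tell which kind of capability a request touched. This is a minimal sketch, assuming the method name has already been extracted from the captured request; the function name `classify_mcp_method` is hypothetical and is not part of Inspectr, whose own logic may differ.

```shell
# Hypothetical helper (not an Inspectr API): map an MCP JSON-RPC method
# name to the kind of capability it targets, based on its prefix.
classify_mcp_method() {
  case "$1" in
    tools/*)     echo "tool" ;;      # e.g. tools/list, tools/call
    prompts/*)   echo "prompt" ;;    # e.g. prompts/list, prompts/get
    resources/*) echo "resource" ;;  # e.g. resources/list, resources/read
    *)           echo "other" ;;     # e.g. initialize, ping, notifications/*
  esac
}

# Usage: classify a few method names as they might appear in captured traffic.
for method in tools/call prompts/get resources/read initialize; do
  printf '%s -> %s\n' "$method" "$(classify_mcp_method "$method")"
done
```

Matching on the prefix keeps the sketch independent of individual method names, so methods such as `resources/templates/list` are still grouped under resources.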

Token usage estimates

Identify expensive flows and unexpected token spikes.
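Exact token counts depend on each model's tokenizer, so an estimate is often enough to spot expensive calls. This is a minimal sketch, assuming the common rule of thumb of roughly four characters per token for English text (real tokenizers vary by model and language) and a spike threshold you choose yourself. The names `estimate_tokens` and `flag_spike` are hypothetical, not Inspectr functions, and Inspectr's own estimation method may differ.

```shell
# Hypothetical helpers (not Inspectr APIs).
# Rough token estimate: about 4 characters per token, rounded up.
# This is only a heuristic for English text; real tokenizers differ per model.
estimate_tokens() {
  text=$1
  echo $(( (${#text} + 3) / 4 ))
}

# Print "spike" when an estimate exceeds a caller-chosen threshold, else "ok".
flag_spike() {
  estimate=$1
  threshold=$2
  if [ "$estimate" -gt "$threshold" ]; then
    echo "spike"
  else
    echo "ok"
  fi
}

# Usage: estimate the size of an example tool result and check it
# against an arbitrary threshold of 1000 tokens.
result='{"content":[{"type":"text","text":"42 open issues"}]}'
tokens=$(estimate_tokens "$result")
echo "estimated tokens: $tokens ($(flag_spike "$tokens" 1000))"
```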

Exportable JSON sessions

Share, review, or investigate MCP sessions offline.

Guided MCP analysis

Surface patterns, anomalies, and behavior changes fast.

MCP insights in practice

- MCP tool list

- MCP tracing flow

- MCP tool call

- Token usage

- MCP request details

Get MCP visibility in seconds

Works with Claude, OpenAI, and other MCP clients. No SDKs, no instrumentation, and no server changes.

npx @inspectr/inspectr --backend=https://your-mcp-server:3000

Drop-in for local development or remote testing with a single command.

Build MCP servers with confidence

Understand real MCP usage before it reaches production, and gain insight into LLM behavior.